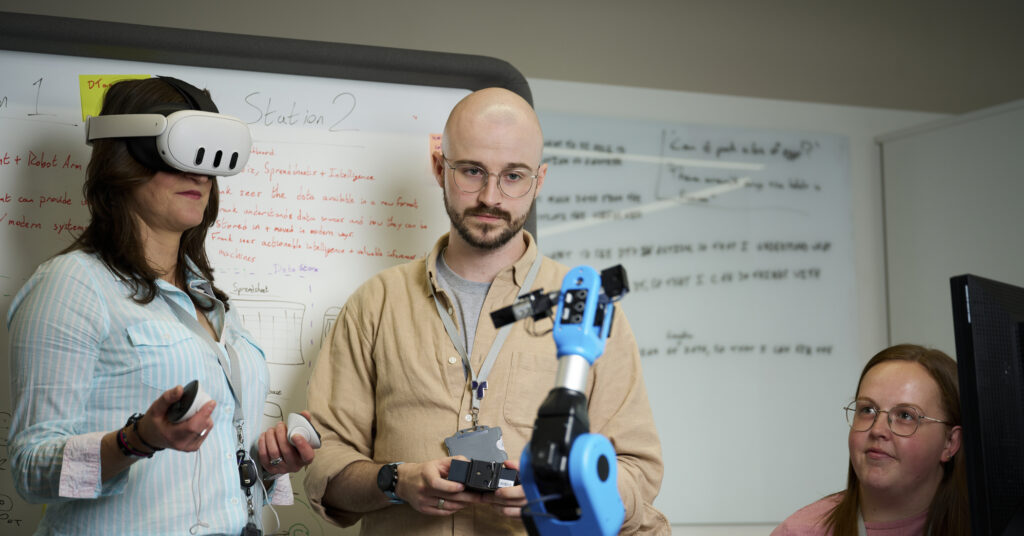

Researcher in Residence Drew MacQuarrie has been exploring VR/AR content production for the last 12 months with Digital Catapult. In this post he explains some of the results of his research and what they mean for content producers who want to achieve realistic eye-gaze when creating free-viewpoint volumetric characters.

MacQuarrie has been a virtual reality (VR) researcher at UCL for the last six years, investigating how users engage with immersive content and how experiences using virtual and augmented reality can be made. Full results of his research can be found here.

Free-viewpoint video is a method for creating VR/AR characters

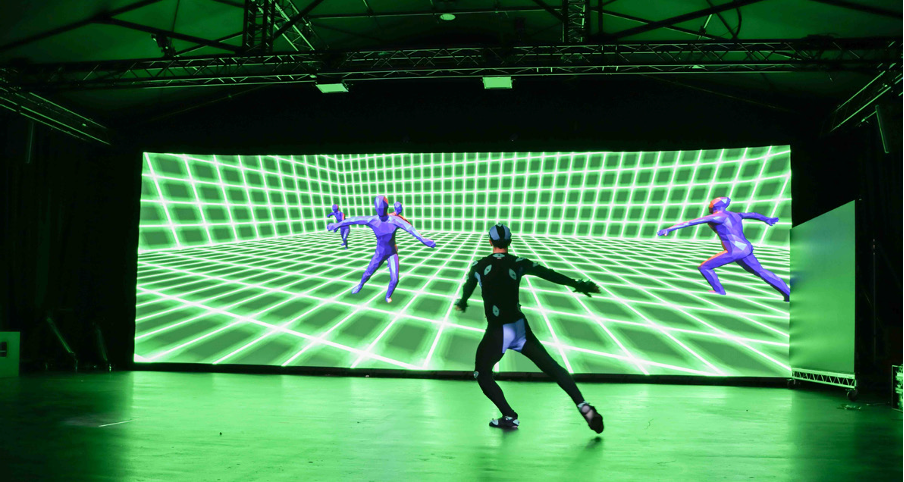

Volumetric capture is essentially content filmed using an array of cameras that supports viewing with six degrees of freedom (the ability to move freely in a 3D space rather than only being able to look from side to side, or up and down). Free-viewpoint video (FVV), a subset of volumetric capture, is a way to create VR/AR characters. Unlike motion capture, which only captures movement, FVV captures both the movement and the surface appearance of a performance. In this way, it’s like capturing a video – except it’s possible to walk around inside. Filming is done in specialist FVV studios, such as the UK’s Dimension Studio, established by Digital Catapult and Microsoft. This allows for the creation of highly realistic characters as video-textured 3D objects. These characters can then be placed into CGI scenery to create near-video-quality VR experiences that support viewing with six degrees of freedom.

FVV is a relatively new technology, and the current generation has some limitations. Firstly, FVV clips play out exactly as they were captured, much like a regular video clip. This limits how interactive the experience can be and creates an issue around where the characters appear to be looking, also known as their eye-gaze direction. If a character addresses the user through a look, and then the user moves, the eye-gaze will no longer be correct and the character may appear to be looking past the user.
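To make the eye-gaze problem concrete, the short sketch below estimates how far off a captured gaze becomes once the user steps away from the spot the character was filmed looking at. It is only an illustration: the function name, the 2D floor-plane coordinates (in metres) and the example positions are assumptions, not part of the study.

```python
import math

def gaze_offset_degrees(character_xz, gaze_target_xz, user_xz):
    """Signed angle (degrees) between the captured gaze direction and the
    direction from the character to the user's current position.

    All positions are hypothetical (x, z) floor-plane coordinates in metres.
    """
    gaze_dx = gaze_target_xz[0] - character_xz[0]
    gaze_dz = gaze_target_xz[1] - character_xz[1]
    user_dx = user_xz[0] - character_xz[0]
    user_dz = user_xz[1] - character_xz[1]

    gaze_angle = math.atan2(gaze_dz, gaze_dx)
    user_angle = math.atan2(user_dz, user_dx)

    # Wrap the difference into the range [-180, 180) degrees.
    diff = math.degrees(user_angle - gaze_angle)
    return (diff + 180.0) % 360.0 - 180.0

# The character was captured looking at a point 2 m straight ahead.
character = (0.0, 0.0)
captured_target = (0.0, 2.0)

# The user starts at that point, then steps 2 m to the side.
offset_before = gaze_offset_degrees(character, captured_target, (0.0, 2.0))
offset_after = gaze_offset_degrees(character, captured_target, (2.0, 2.0))
print(offset_before, offset_after)
```

In this example the gaze is exactly on the user before the step, and about 45° off afterwards. Because the clip plays out as captured, nothing in the FVV character can correct for that offset.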

Also, where scenes have multiple characters, each one is usually captured separately and the complete scene is assembled in post-production. This can make filming difficult as shots must be planned carefully so that actors stand in precisely the right location and look in the right place to ensure the scene works correctly when pieced together.

To establish the on-set tolerance for eye-gaze, it’s important to understand how good humans are at telling where these FVV characters are looking when viewed in a head-mounted display (HMD). It is also important to understand how far users can move out of the eye-gaze of a character who is addressing them before the experience starts to look wrong. To explore these issues, an experiment was performed that measured how well users could assess the eye-gaze of FVV characters when viewed in an HMD.

How well can humans assess where someone is looking in virtual reality?

There have been a number of experiments that explored how well humans can assess the eye-gaze of other humans in the real world. In an additional exploration during this project, an actor was filmed as an FVV character looking towards a number of targets at different angles. These were 0°, i.e. the character was looking straight ahead, and then ±15°, ±30° and ±45°. The actor looked at these angles “naturally”, with a mix of head and eye movement, so the actor’s eyes were not always aligned with the direction the head was facing.

Wearing a VIVE HMD, 36 participants were asked to assess the gaze of an FVV character. Participants were given a hand controller, which they could use to rotate the FVV character. They were asked to rotate the character left and right until they felt it was looking at the target. The location of the target was repeatedly varied, so sometimes it was directly between the FVV character and the user, and sometimes it was quite far away. The angle at which the FVV character was looking also varied. All participants provided an answer for each combination of the character’s eye-gaze directions and the target positions.

People are much less able to assess the eye-gaze direction of FVV characters when viewed using head-mounted displays

In the real world, humans can tell where another human is looking with exceptionally high accuracy. Findings indicate that people are much less able to assess the eye-gaze direction of FVV characters when viewed using head-mounted displays. This is likely due to the limited resolution that the current generation of HMDs provides. If users are less able to discern the minute details of the iris, this may substantially reduce their ability to assess eye-gaze direction. This is a useful finding, as it means there is more tolerance than might be expected for getting the eye-gaze direction of FVV characters wrong without it being noticed by users.

Another finding was that, in line with real-world results, humans are much better at assessing eye-gaze direction when it is directed towards themselves. People are very good at telling if someone is looking at them, but this precision drops off as the object being looked at gets further away. This also happens when viewing FVV characters in an HMD. In practice, this means it is more important to ensure eye lines are correct in VR or AR content when they are directed near a user. In fact, accuracy can be sacrificed for eye lines further away from the user to ensure closer ones are correct. For example, it’s possible to rotate an FVV character to improve an eye line near the user, at the expense of an eye line further away.

On average the eye-gaze assessment was misjudged by 10%

In contrast to real-world studies, the average eye-gaze assessment of VR characters viewed in HMDs was consistently wrong. Specifically, participants misjudged the eye-gaze direction as being about 10% closer to themselves than the targets really were. Most likely, this was caused by the well-known phenomenon that users tend to under-perceive distances in VR by around 10%. If this is the case, it means users were correctly assessing the eye-gaze of the FVV character, but were misperceiving the target as being 10% closer to themselves than it really was. In practice, this might have interesting implications for FVV content production. To ensure eye lines appear correct, it may be better to film scenes such that the eye line is 10% closer to the user than is technically right.
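As a rough illustration of that suggestion, the sketch below moves an intended gaze target 10% of the way towards the user before it is used to plan an eye line. The function name, the coordinate convention and the reading of “10% closer” as a fraction of the user-to-target distance are all assumptions made for this example. They are not a procedure taken from the study.

```python
def shifted_gaze_target(user_pos, target_pos, bias=0.10):
    """Return a gaze target moved towards the user by `bias` (a fraction
    of the user-to-target distance).

    Positions are hypothetical (x, y, z) coordinates in metres. The default
    bias of 0.10 mirrors the roughly 10% misjudgement reported above.
    """
    return tuple(t + bias * (u - t) for u, t in zip(user_pos, target_pos))

# Example: the user stands at the origin and the object the character
# should appear to look at is 2 m to the side and 4 m ahead.
user = (0.0, 0.0, 0.0)
intended_target = (2.0, 0.0, 4.0)

planned_target = shifted_gaze_target(user, intended_target)
print(planned_target)  # approximately (1.8, 0.0, 3.6)
```

Whether a uniform 10% shift is appropriate for a given scene would need checking in the headset, since the figure is an average across participants.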

What these findings mean for FVV content producers

Surprisingly little is known about the underlying process of how humans interpret eye-gaze direction. As a result, it’s difficult to model how the perception of a character’s eye-gaze direction in HMDs will change as the resolution of VR displays improves. It’s useful to note that the current generation of head-mounted displays allows more tolerance for incorrect eye lines than in the real world, but that eye-gaze should be more precise when directed towards the user.

The four major takeaways from the experiment were:

- Filming FVV characters often requires planning eye-gaze directions in advance

- The resolution of the current generation of HMDs means there is more tolerance for error than in the real world

- It’s more important that eye lines are accurate when the gaze is directed near to the user

- Eye-gaze direction was consistently misjudged as being about 10% closer to the user than the target really was

Full results of the experiment can be found in this paper, published at IEEE VR 2019. Find out more about immersive content creation from Digital Catapult’s industry-leading CreativeXR and Augmentor programmes.