Hat Trick Labs supported the project with character development and script workshopping, and worked with Meaning Machine on the dialogue API. Target3D was interested in NVIDIA’s Avatar Cloud Engine (ACE) toolkit, which helps developers bring digital avatars to life with generative AI, and so brought in help from NVIDIA. Alex Horton, of Horton Design Works, also came on board to help with character design, offering his experience in steering tech teams to create quickly. Finally, Ollie Lindsey from All Seeing Eye acted as system architect.

Five weeks to get demo-ready

The technical challenge was deciding whether to bolt together off-the-shelf software with some added middleware and backend, or to use the ACE toolkit, which is itself a collection of products. NVIDIA helped secure the accreditation needed to use ACE, but that meant obtaining accreditation for different products in the kit at different points in the five-week sprint. Given the time constraints, the team decided to build a quick minimum viable product (MVP) and then iterate.

“We called it a sausage factory-type production where we could split out certain parts and swap out with either voice libraries, motion libraries, softwares at different stages, and still be able to actually demonstrate something at the end of it.”

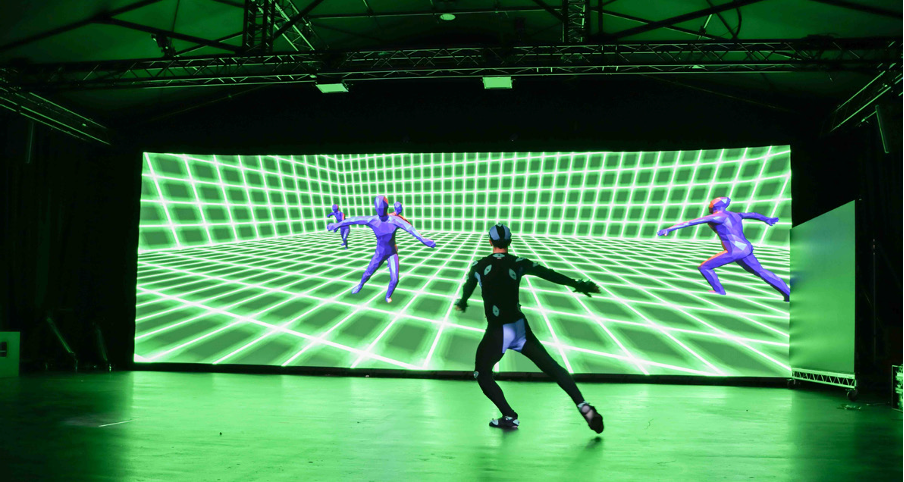

The team developed a process of speech-to-text, text prompt to LLM, LLM response, and then text-to-speech and audio-to-face, with blend-shape animations rendered onto a metahuman. They worked with ChatGPT and NVIDIA’s Riva, using ElevenLabs to synthesise voices. Realising that one PC wasn’t enough, the team split the work over two machines, and then over three once Motion Library was included.

In the end the team used Riva for the speech-to-text and Meaning Machine’s middleware to help with characterisation and dialogue, which went up to an LLM. The team used off-the-shelf ElevenLabs voices at first but then had Kerwyn record his voice library. Finally, they used Audio2Face and Unreal Engine – all operating on three very expensive computers. It was quite the journey. “I think I’ve still got the napkins that we drew it all out on originally,” said Dan.
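The “sausage factory” idea of swappable stages can be sketched in a few lines. This is a minimal illustration and not the team’s actual code: every function below is a hypothetical stand-in, and none of them call real Riva, ElevenLabs, Audio2Face or Unreal Engine APIs. The point is only that each stage has one input and one output, so any stage can be replaced without touching the others.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Stage:
    name: str
    run: Callable[[object], object]


# Hypothetical stand-ins for the real services (assumptions for illustration).
def fake_speech_to_text(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_dialogue(text: str) -> str:
    return f"Kai says: {text}"


def fake_text_to_speech(text: str) -> bytes:
    return text.encode("utf-8")


def fake_audio_to_face(audio: bytes) -> dict:
    # Pretend one blend-shape frame is produced per byte of audio.
    return {"audio": audio, "blend_shape_frames": len(audio)}


DEFAULT_STAGES = [
    Stage("speech_to_text", fake_speech_to_text),
    Stage("dialogue", fake_dialogue),
    Stage("text_to_speech", fake_text_to_speech),
    Stage("audio_to_face", fake_audio_to_face),
]


def run_pipeline(stages: List[Stage], payload: object) -> object:
    """Feed the output of each stage into the next one."""
    for stage in stages:
        payload = stage.run(payload)
    return payload


def swap_stage(stages: List[Stage], name: str, replacement: Callable) -> List[Stage]:
    """Return a new stage list with the named stage replaced."""
    return [Stage(name, replacement) if s.name == name else s for s in stages]
```

Swapping an off-the-shelf voice for a custom one then becomes a one-line change, for example `swap_stage(DEFAULT_STAGES, "text_to_speech", my_custom_voice)`, where `my_custom_voice` is whatever function wraps the new voice library.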

Developing a metahuman in a responsible way

With plenty of experience using mocap and voiceovers, Target3D is not new to the conversation about ethics, copyright, artist management and data protection. It already has robust contracts in place to make sure performers’ likenesses are not exploited in any way. Creating Kai did bring up these issues as it is a composite character built with Kerwyn’s original body scan, a recognisable Unreal Engine character, an off-the-shelf voice library (initially), and motion capture from another performer, Gareth Taylor.

Target3D looked carefully at what that meant in terms of giving people credits, and who would own the performance at the end of it. Casting agencies and production companies that shoot at Target3D’s studios are beginning to add clauses to their contracts with artists stating that the client should not use the performer’s name, image, voice, likeness or any other identifying features in connection with the development or application of AI and machine learning (ML) technology. Contracts like these are helping everyone navigate the issues. But there remains a large element of trust involved.

Dan commented that there is currently no real way of knowing when assets are misused. Files might be left on one production company’s hard drive, another company might need motion capture for a project that has to be delivered very quickly, and someone might decide to use those assets without following the proper protocols. For now, contracts rest on trust, and it is very hard to find out whether a piece of motion has been reused, or slightly manipulated and used again in some way.

The demo at SXSW included a clip of Kai talking about the ethics of creating composite characters: “Who does a character belong to when its features are borrowed from real humans? It’s a bit like making a smoothie from everyone’s favourite fruits – delicious, but you have to make sure everyone’s OK with their fruit going into the blender.”

Controlling a metahuman character’s responses

One of the first things Target3D wanted to work out was how to keep Kai’s answers to a certain level and not let it carry on talking for too long. Meaning Machine and All Seeing Eye worked out ways to get sentences back that would be usable. The character and voice got funnier as the process went on, said Dan.
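The article does not describe how Meaning Machine and All Seeing Eye actually constrained the replies. As a purely illustrative sketch of one simple approach, a reply from the LLM could be trimmed to a small number of complete sentences before it is sent on to voice synthesis. The function name and the limits below are assumptions, not details from the project.

```python
import re


def trim_reply(reply: str, max_sentences: int = 2, max_chars: int = 200) -> str:
    """Keep only the first few complete sentences of an LLM reply."""
    # Split on whitespace that follows sentence-ending punctuation.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", reply.strip()) if s]
    kept = []
    for sentence in sentences[:max_sentences]:
        candidate = " ".join(kept + [sentence])
        # The first sentence is always kept, even if it exceeds max_chars,
        # so the character never ends up with nothing to say.
        if kept and len(candidate) > max_chars:
            break
        kept.append(sentence)
    return " ".join(kept)
```

Cutting on sentence boundaries rather than at a fixed character count avoids handing the text-to-speech stage a half-finished sentence. In practice, a length instruction in the prompt would likely be used alongside a guard like this.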

There were certainly some laughs from the audience at SXSW when Kai was asked a range of questions. “How do you keep your armour so shiny?” he was asked. “Oh, the armour? It’s polished with the sheer absurdity of my existence. A good buffing of existential dread does wonders.”

Working together to go faster

Five weeks is not long to optimise a metahuman. Voice synthesis alone would usually require at least four weeks. A slight delay in Kai’s responses in the demo could partly be attributed to the connection from London to Austin, but Dan acknowledged that there are latencies at every part of the pipeline which, with further development, could be reduced. Integrating NVIDIA’s Riva may also speed things up, as could adjusting how the script is prompted. Target3D will continue to work with Meaning Machine to understand this better.
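One way to find out where the delay accumulates is to time each stage separately. The sketch below is an assumption about how that could be done rather than a description of the team’s tooling: it wraps any list of named stage functions and records how long each one takes, using only Python’s standard library.

```python
import time
from typing import Callable, Dict, List, Tuple


def time_stages(
    stages: List[Tuple[str, Callable]], payload: object
) -> Tuple[object, Dict[str, float]]:
    """Run each (name, function) stage in order and record its duration in seconds."""
    timings: Dict[str, float] = {}
    for name, fn in stages:
        start = time.perf_counter()
        payload = fn(payload)
        timings[name] = time.perf_counter() - start
    return payload, timings


def slowest_stage(timings: Dict[str, float]) -> str:
    """Return the name of the stage that took the longest."""
    return max(timings, key=timings.get)
```

Run over many questions, figures like these would show whether the network hop, the LLM call or the voice synthesis dominates the wait, and so which stage is worth optimising first.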

“It’s going to be really interesting to understand what [the NVIDIA Unified Toolkit] means for being able to expedite a lot of the issues we ran into. And how splitting the different processes across different GPUs and different boxes would help us dive into this much quicker than we did. We spent the first two or three weeks researching and not having committed anything to code. And then the last week or so, right up to last night, has been pretty exciting.”