Capturing reality: Exploring the future of motion capture in immersive content-making

Posted 11 Jun 2019

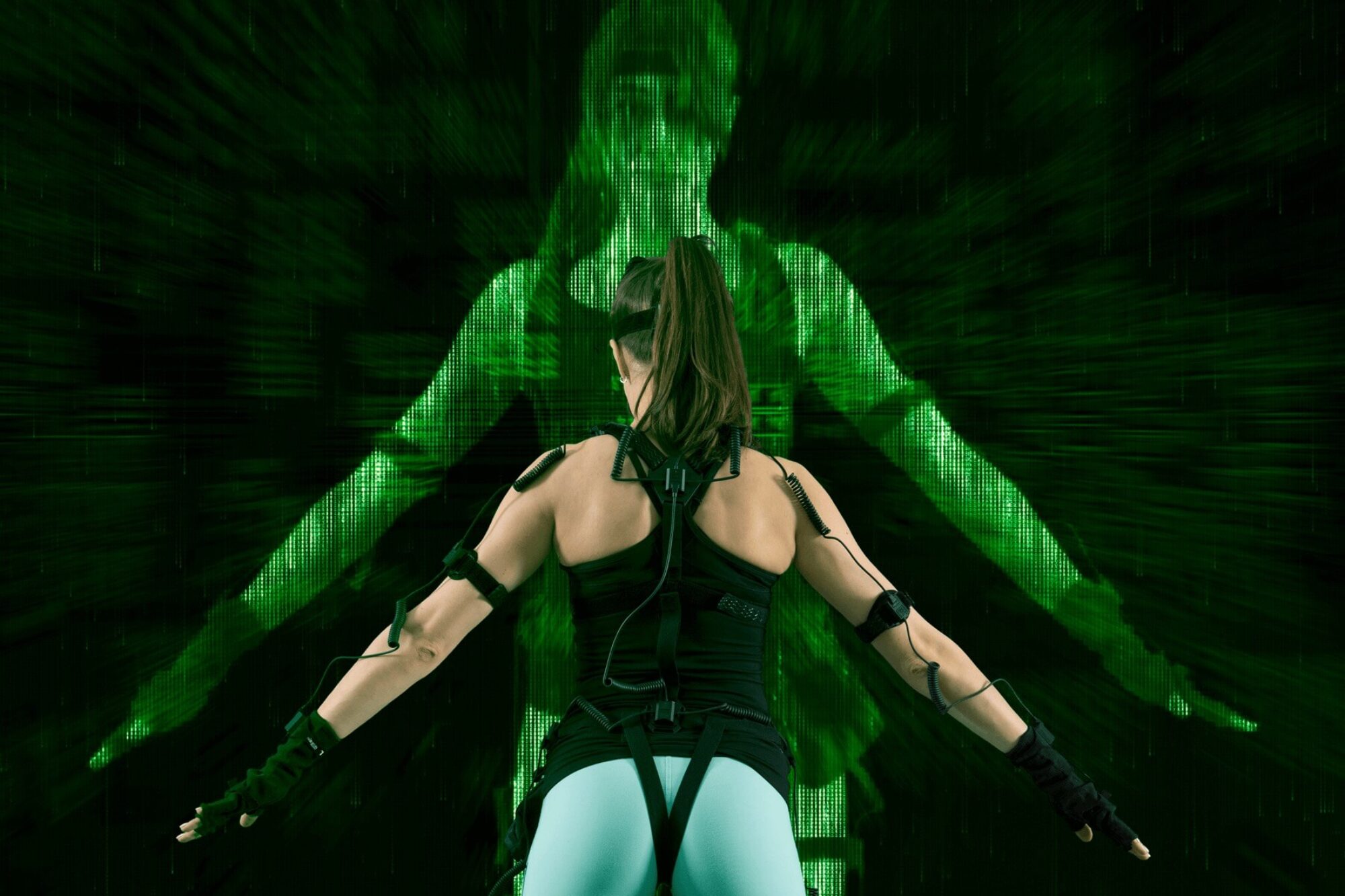

As the variety of high-quality motion capture systems on the market increases, and their cost and complexity decrease, the industry is becoming more accessible to those without big budgets and huge technical teams. Digital Catapult brought together 15 companies working across the immersive industry, from VFX specialists and game designers to advertising executives and medical technologists, for a one-day workshop on motion capture at Imaginarium Studios in Ealing. The workshop gave these companies a unique opportunity to share knowledge and explore the challenges of using motion capture, with a particular focus on delivering content for virtual and augmented reality.

The following paragraphs take us through what happened on the day, describing the state-of-the-art demonstrations, detailing some of the key learnings from the group discussions and analysing how to capture motion specifically for immersive viewing. Look out for similar immersive workshops coming in the future from Digital Catapult in collaboration with its partners.

Demonstrations

The cutting edge technology available at the Imaginarium Studios showed how motion capture can be mixed with real life performance to produce incredible finished projects such as Coldplay’s ‘Adventure of a Lifetime’, a music video born from a chance meeting between Imaginarium-founder and Mocap Master Andy Serkis and Coldplay’s Chris Martin whilst on a flight from LA.

Imaginarium’s CEO Matt Brown also showed the results of the studios’ groundbreaking work to capture the West End musical ‘The Grinning Man’. The experience, displayed on a Magic Leap One, allowed attendees to view a scene from the production involving up to 16 actors on stage at any one time, fully realised in 360 degrees on the mixed reality headset.

As an example of low cost motion capture systems, the University of Portsmouth took attendees through each step of the deployment of their live location-based performance capture theatre piece ‘Fatherland’ as they were setting up in real time. This piece, created with Limbik Theatre, was one of five projects from Digital Catapult’s CreativeXR 2018 cohort to receive additional funding to complete a prototype. The system used to create ‘Fatherland’ uses a combination of an HTC Vive headset and Vive trackers driven by Ikinema Orion and streamed into Unreal Engine. This makes it possible for an audience member to don the headset and join a tracker-clad actor on stage, interacting with them in real-time as the story unfolds. The audience can see both the headset point-of-view on large screens and the real life movement of the actor performing the story around the volunteer. Although this is a low cost version of motion capture, compared to Hollywood level budgets, it demonstrates how with the technology and some imagination, something unique which pushes boundaries can be created.

Group Discussions

The day included two sets of group discussions, each split into either a technical or business focus. For the technical group, the majority of attendees had at least some experience developing and deploying motion capture, ranging from low cost Perception Neuron systems and Vive trackers to the world leading technology available at Imaginarium. These knowledge-sharing sessions provoked in-depth discussions about the challenge of balancing budgets with ambition for high production values, with the conversation often turning to how to get the maximum potential out of lower-cost hardware.

Motion capture equipment comes at different price points, each with its own advantages and disadvantages. Broadly speaking, motion capture can be split into optical and non-optical solutions.

Optical systems track markers or movement via a fixed array of external cameras surrounding a volume. This is accurate when functioning correctly but can’t track occluded motion. It also relies on setting up a defined space for capture.

Non-optical systems track movement with inertial, mechanical or magnetic sensors, making them susceptible to global drift but able to function in almost any environment and without line of sight.
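To illustrate why inertial sensors are prone to drift, here is a minimal sketch that integrates accelerometer readings twice to estimate position. The sensor is assumed to be stationary, and the bias value, sample rate and function names are hypothetical choices for illustration, not figures from the workshop or from any particular product.

```python
def integrate_position(accel_samples, dt):
    """Estimate 1D position by integrating acceleration twice."""
    velocity = 0.0
    position = 0.0
    for accel in accel_samples:
        velocity += accel * dt      # first integration: acceleration -> velocity
        position += velocity * dt   # second integration: velocity -> position
    return position


def drift_after(seconds, bias=0.01, rate_hz=100):
    """Position error of a stationary sensor whose only reading is a constant bias.

    bias is in m/s^2 and is an assumed value chosen for illustration.
    """
    dt = 1.0 / rate_hz
    sample_count = int(round(seconds * rate_hz))
    return integrate_position([bias] * sample_count, dt)


if __name__ == "__main__":
    for seconds in (1, 10, 60):
        print(f"{seconds:>3} s -> {drift_after(seconds):.3f} m of apparent movement")
```

Because the error is integrated twice, it grows roughly with the square of elapsed time, so even a small bias turns into a noticeable position error over a long take. Optical systems avoid this by measuring position directly against fixed cameras.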

Interest from the attendees was mostly split between live location-based performances and recorded studio work and each use case presented its own set of production and business challenges. It became clear that there is rarely a perfect solution. Some of the challenges brought up on the day included handling occlusion for multi-user motion capture; the intricacies of hand and facial tracking; and the reliability, portability, and robustness of location-based capture in different environments (such as theatres, which are full of metal that can interfere with wireless signals).

The Imaginarium team stressed the importance of readiness from a pre-production standpoint, focusing on the work that happens pre-shoot and the intricacies that go into making an amazing piece of motion capture before anyone thinks about donning the suits. A good understanding of actors’ experiences and backgrounds is crucial, with some fields (such as theatre and dance) typically lending themselves more to motion capture work. Giving actors time to acclimatise to the motion capture suit is vitally important; it is very much a different type of acting, and allowing the actors suitable time to prepare will bring out a better performance. Casting the best person for the role is also important: it has to be someone who feels comfortable in what, for many, is a strange form of acting, someone who can bring the right physicality to the piece and someone who can embody the character and bring them to life.

The Imaginarium team went through their usual pre-production pipeline, starting with breaking down the end goal for the shoot. Having a clear focus is key: although a certain amount of creativity can happen on the day, keeping a goal in mind channels energy into the final product. The team works through the script, looking at the length of scenes and breaking them into sections shorter than five minutes to prevent massive file sizes. One stand-out moment was a discussion around transitions between scenes, or cuts within a scene. It’s something we take for granted when watching film or television, but the flow of a scene is incredibly important and how those pieces work together can make or break a piece of content.
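The idea of breaking scenes into sections shorter than five minutes can be sketched in a few lines. This is an illustrative assumption rather than the Imaginarium team’s actual tooling: the function name `split_scene` is hypothetical, and it simply divides a scene into equal-length takes, whereas a real breakdown would place cuts at natural points in the script.

```python
import math


def split_scene(total_seconds, max_seconds=300.0):
    """Split a scene into equal takes, each strictly shorter than max_seconds.

    Returns a list of (start, end) times in seconds.
    """
    if total_seconds <= 0:
        return []
    # Adding one to the floor guarantees each take is strictly under the limit,
    # even when the scene length is an exact multiple of max_seconds.
    take_count = math.floor(total_seconds / max_seconds) + 1
    return [
        (total_seconds * i / take_count, total_seconds * (i + 1) / take_count)
        for i in range(take_count)
    ]


if __name__ == "__main__":
    # A hypothetical 12-minute scene.
    for start, end in split_scene(12 * 60):
        print(f"take from {start:.0f} s to {end:.0f} s")
```

Equal-length takes keep the example simple; the point is only that the number of takes follows directly from the scene length and the chosen limit.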

Readiness was, unsurprisingly, also a point of discussion for the technical team. Plenty of time and money can be saved by preparing as much as possible before anything gets captured. For example, simple props help to match the motion to the virtual output, such as a ‘see-through’ guitar (made up of only the frame so suit markers remain visible) or short, crutch-like arm extensions for mimicking the movements of an ape.

Having prepared animation rigs (a ‘skeleton’ to pin the capture to) allows motion capture to be attached to the target model and assessed on the day. The entire scene is captured in three dimensions in the motion capture volume, making it easier to focus more on performance during capture and less on framing and lighting. Standard video footage is also captured, providing a reference of the real world movements that informed the digital output. Treating motion capture shoots with the same respect as old-school filmmaking minimises expensive ‘fix it in post’ alterations.

Motion capture for immersive

For the final step, displaying the content, the workshop focused on delivery via immersive devices. Understanding the development pipeline for such devices is a technical challenge in itself, although game engines such as Unity and Unreal help with this. Narratively, there is the agency of the viewer to contend with: things can’t be hidden or cut frequently when the viewer becomes the storyteller. The viewer needs a sense of purpose, whether by interacting directly with the content or, more passively, by gazing at different locations. Focus and readiness in preparing to capture content via mo-cap become all the more important when the audience holds the camera. This point was stressed over and over again, as there is nowhere to hide imperfections when content can be viewed from any angle.

Since ‘Star Wars: Episode I – The Phantom Menace’ first captured Jar Jar Binks as a full feature-length motion captured character in 1999, the technology has progressed in leaps and bounds. It has become cheaper and more refined; R&D is ongoing, and intricate hand tracking and facial capture are improving alongside smoother production pipelines and increased reliability. With motion capture now being used for live performances, beamed into VR headsets and capturing fully realised theatre scenes forever in a Magic Leap, the relationship between the immersive community and motion capture technology has only just begun.